The Real Cost of Running AI in Production: What Teams Need to Know

Understand the cost of running AI in production and how to cut expenses across infrastructure, inference, and MLOps.

Running AI models in production isn’t just about keeping servers online—it’s about managing an evolving system with complex infrastructure, real-time demands, and long-term maintenance. Yet, most teams only plan for the training phase and overlook the ongoing cost of running AI in production. That’s a mistake.

This post breaks down where those costs come from, what makes them balloon, and how to reduce them smartly—without compromising performance or reliability.

Understanding the True Cost of Running AI in Production

Many developers focus on model development and training, but the real expenses appear after deployment. Once a model is live and serving predictions to users or systems, the cost of running AI in production starts to pile up—sometimes fast, and often silently.

Key cost areas include:

- Inference compute – every prediction made by your model costs resources.

- Cloud infrastructure – servers, storage, networking.

- MLOps – engineering time, automation tools, monitoring, retraining.

- Data pipelines – real-time data ingestion and preprocessing.

- Model updates – ongoing improvements and bug fixes.

Unlike training, which is often a one-time or periodic effort, running AI in production is continuous. You’re now operating software that learns, evolves, and sometimes breaks in unpredictable ways.

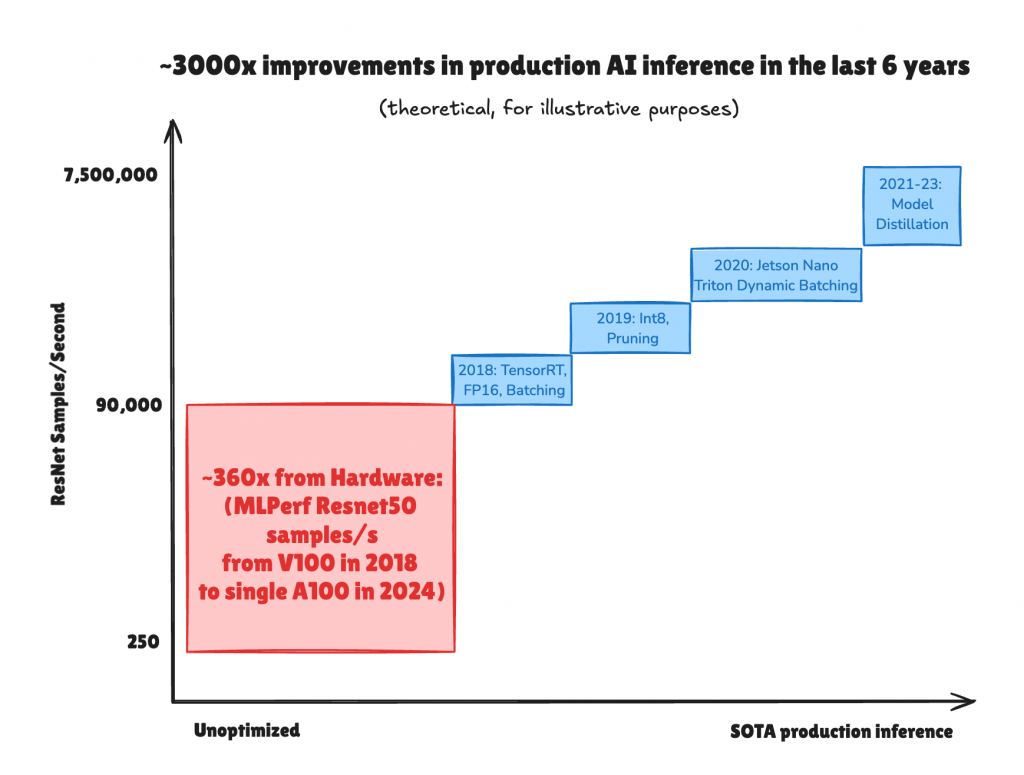

Inference: The Quiet Budget Killer in AI Production

Source: 2024, Efficiency is Coming: 3000x Faster, Cheaper, Better AI Inference from Hardware Improvements, Quantization, and Synthetic Data Distillation, Latent space, available at: https://www.latent.space/p/nyla (accessed 31 March 2025)

Inference is the heartbeat of any deployed model. Whether you’re offering AI-powered recommendations, fraud detection, or chatbot responses, your model performs inference every time a user interacts. And each of those predictions costs money.

The cost of running AI in production increases sharply with traffic volume. A model that serves 1,000 predictions per day might cost only a few dollars per month. But scale that to 10 million predictions a day, and suddenly you’re paying thousands—especially if you rely on GPU-accelerated instances.

Models like high-complexity GPT, BERT, or image classifiers use significant computation per inference. Teams can cut costs using:

- Model distillation to create smaller, faster models.

- Quantization to reduce model size and inference latency.

- Batching to process multiple inputs at once.

If you’re not optimizing for inference, you’re burning money with every prediction.

Infrastructure Costs: Cloud vs On-Prem in AI Deployment

Choosing where to host your model directly impacts the cost of running AI in production. Most teams start with cloud providers like AWS, GCP, or Azure for speed and flexibility. But cloud costs can escalate quickly—especially when running 24/7 services or scaling globally.

Cloud advantages:

- Fast setup, scalability

- Access to specialized hardware (GPUs, TPUs)

- Managed services for deployment and monitoring

Cloud disadvantages:

- Ongoing compute charges

- Data egress fees

- Costly long-term usage

On-premises or hybrid solutions can lower the long-term AI infrastructure costs, especially for large-scale, stable workloads. But they come with upfront hardware costs, staffing requirements, and longer setup times.

To control costs, many companies move to a hybrid model—prototyping in the cloud, then migrating steady workloads on-prem once traffic and performance requirements stabilize.

MLOps and Maintenance: The Silent Majority of Costs

Getting a model into production is just the start. Keeping it working correctly—without drift, bias, or performance drops—requires ongoing work. This is where MLOps (Machine Learning Operations) comes in.

And it’s often the largest hidden component in the cost of running AI in production.

Here’s what adds up:

- Monitoring for model drift – data and predictions can change over time.

- Retraining pipelines – to refresh models as new data comes in.

- CI/CD for models – automating deployments and version control.

- Logging and alerting systems – to detect and respond to failures.

- Security and compliance – especially in regulated industries.

Each of these systems requires tools, cloud resources, and—most importantly—engineering time. For every production model, expect to budget for at least one part-time or full-time engineer to manage the lifecycle.

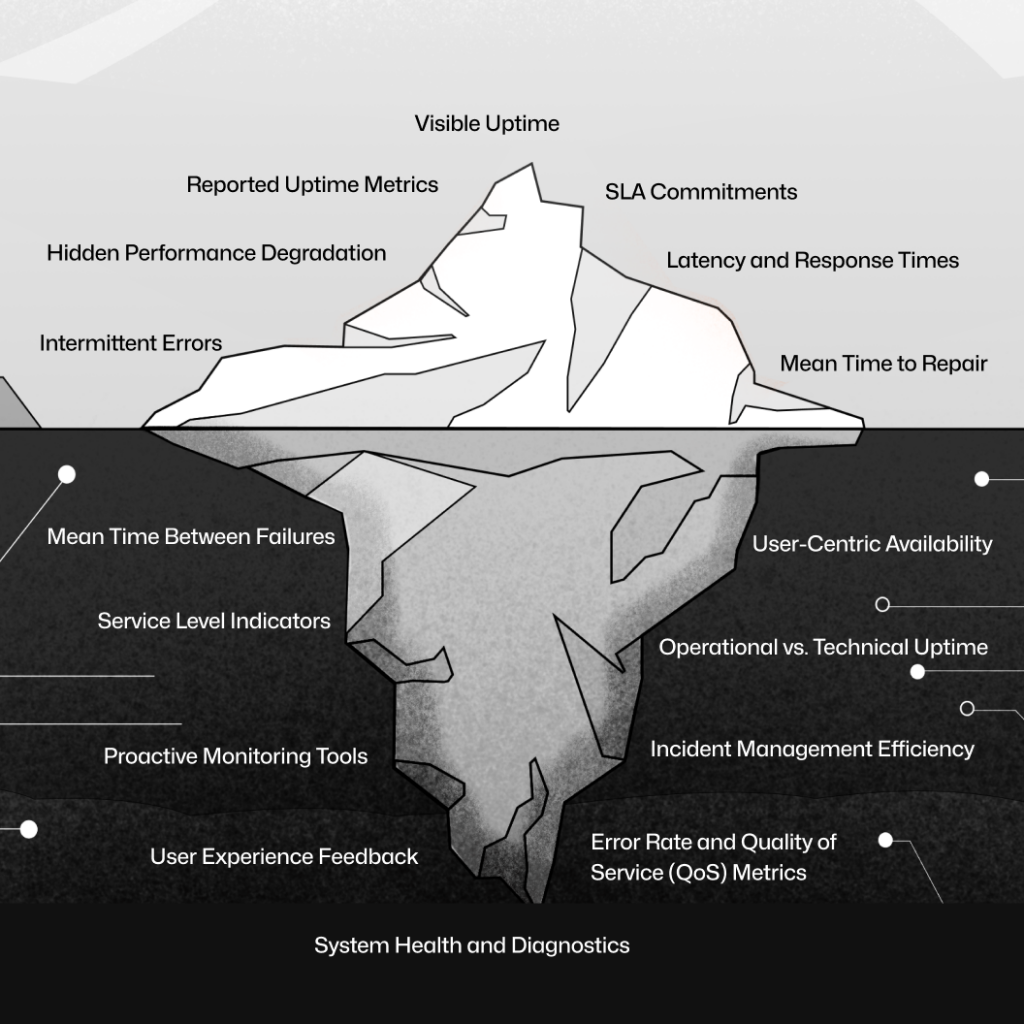

Latency, Uptime, and SLA Pressures

Source: 2025, Uptime vs. Availability: Impact on SLAs, zenduty, available at: https://zenduty.com/blog/uptime-vs-availability/ (accessed 31 March 2025)

The cost of running AI in production also depends on how critical your model is to the product experience. If you’re deploying a recommendation engine or fraud detection system, every second counts—and downtime can cost more than compute.

To meet service level agreements (SLAs) or internal latency goals, teams must invest in:

- Low-latency architecture – including high-performance hardware.

- Load balancing and autoscaling

- Global distribution and redundancy

- Failover mechanisms – for zero-downtime deployments

All of these infrastructure choices increase reliability—but they also increase cost. Balancing performance with affordability is one of the toughest challenges in scaling AI systems.

Case Example: Deploying NLP at Scale

Let’s say you’re a startup building a customer support chatbot using a transformer-based NLP model. Here’s a ballpark breakdown of the cost of running AI in production:

- Training: $10k+ (one-time)

- Inference: $3k/month (based on usage)

- Cloud infrastructure: $1k–$5k/month (compute, storage, network)

- Monitoring and MLOps tools: $500–$2k/month

- Engineering time: 1 FTE at $120k/year

Even with an open-source model, your ongoing cost can range from $5k to $10k+ per month, depending on how many users you serve and how optimized your system is.

This example shows why early planning is crucial. If you underestimate the cost of inference, data handling, and monitoring, your model could become too expensive to sustain.

How to Reduce the Cost of Running AI in Production

The good news: most teams can reduce the cost of running AI in production with a few key strategies.

- Optimize the model architecture – use smaller or more efficient models like MobileNet or DistilBERT where possible.

- Use autoscaling wisely – scale up during traffic spikes, but don’t pay for idle compute.

- Leverage serverless AI offerings – pay only for what you use.

- Compress models for faster inference – using tools like ONNX or TensorRT.

- Set usage limits and quotas – to avoid surprise spikes in costs.

- Benchmark performance regularly – and tune your deployment accordingly.

Most importantly, treat cost as a first-class citizen in your AI architecture decisions—not an afterthought.

Final Thoughts: Plan Ahead or Pay Later

The cost of running AI in production isn’t just about compute—it’s about the entire system: infrastructure, talent, tools, and operations. Many teams rush to deploy AI features and find themselves stuck with brittle systems that are expensive to maintain and hard to scale.

Planning early, optimizing aggressively, and understanding where the real costs lie can save you thousands—if not millions—over time.

Related Articles

Feb 03, 2026

Read more

How Claude Code Is Changing How Anthropic Builds Software

Claude Code is Anthropic’s AI coding tool, transforming how engineers work and shaping the future of AI-powered software development.

Jan 27, 2026

Read more

Why So Many See an AI Bubble Emerging

Is the AI bubble real or just hype? A clear look at why AI feels overheated, how tech bubbles form, and what history suggests comes next.

Jan 20, 2026

Read more

The Problem With AI Image Safeguards

The technical limits of AI image safeguards are becoming clear as image tools spread, revealing why abuse and misuse are so hard to stop.

Jan 13, 2026

Read more

How to Choose the Right AI Tool in 2026 (Without Wasting Money)

Not sure how to choose the right AI tool in 2026? This guide helps you avoid hype, save money, and pick tools that actually fit how you work.

Jan 06, 2026

Read more

Best AI Tools for 2026: What’s Actually Worth Using

The best AI tools for 2026 aren’t the most hyped ones. This guide cuts through the noise to highlight AI tools that actually earn their place.

Dec 30, 2025

Read more

Best Microlearning Apps for 2026: Learn New Skills in Minutes

Best microlearning apps to grow your skills quickly, fit lessons into your day, and make learning simple and effective.